Security Tips for the Software Development Lifecycle

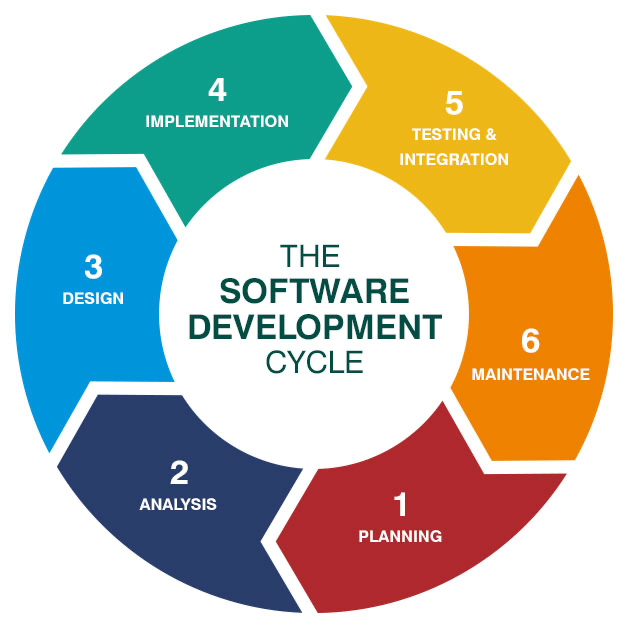

The software development life cycle is used to design and test software products, but each step has the possibility to introduce security risks.

The software development life cycle is a set of six steps used in the software industry to design, build, and test high-quality software products. It is a very common framework among developers, but each step can introduce security risks into an application.

From a security point of view, it is important to understand what needs to be done at each step to avoid shipping an application filled with security bugs. Going back to fix these issues later takes significantly longer than doing it correctly the first time. This article goes through each phase of the lifecycle and highlights the security-related tasks for each step. Following these guidelines will not guarantee a flawless application, but it should leave you with a far more secure one by the end of the process.

Planning and Analysis

This phase is about understanding the requirements for your application: what functionality it needs, how many resources are required to complete the project, what the deadlines are, and what the security requirements are.

Outline Security and Compliance Requirements: This includes the technical and regulatory needs for your application. On the technical side, this means things like making sure you have proper encryption for data in storage and data in transit. Regulatory requirements depend on the type of data the software will store and transport and on the laws and standards that apply to that data, such as the Health Insurance Portability and Accountability Act (HIPAA) or the Payment Card Industry Data Security Standard (PCI-DSS).

These regulations mandate that certain customer information is collected, stored, and transported in a secure way, so you need to be aware of every regulation that will affect your application.
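As a rough illustration of the "data in transit" requirement, the sketch below builds a client-side TLS configuration with Python's standard `ssl` module. The function name `make_tls_context` and the choice of TLS 1.2 as the minimum version are assumptions made for this example; they are not requirements quoted from HIPAA or PCI-DSS.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Return a client-side TLS context with certificate checks enabled."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

A context like this can be passed to standard-library clients that accept an `ssl.SSLContext`, so that every outbound connection in the application shares the same baseline.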

Security Awareness Training: If you are working on a team of developers, they need to be trained on the security practices relevant to the project. This ensures everyone understands the requirements set out for the application and reduces the number of mistakes the team will make.

Audit Third-Party Software Components: Third-party components are commonly used to develop software faster and add more features to an application. However, third-party software can introduce its own vulnerabilities into the application. According to Veracode, about 90% of third-party code does not comply with enterprise security standards like the OWASP Top 10. It is important that you audit any third-party software that you plan to use.
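One small part of such an audit can be automated: listing what is installed and comparing it against versions you already know to be problematic. The sketch below is a minimal version of that idea in Python. The helper names and the `advisories` dictionary are hypothetical; in practice the advisory data would come from a vulnerability database or a dedicated dependency-scanning tool.

```python
from importlib import metadata


def installed_packages() -> dict[str, str]:
    """Map each installed distribution name (lowercased) to its version."""
    packages = {}
    for dist in metadata.distributions():
        name = dist.metadata.get("Name")
        if name:  # skip distributions whose metadata has no name
            packages[name.lower()] = dist.version
    return packages


def find_flagged(installed: dict[str, str], advisories: dict[str, set[str]]) -> list[str]:
    """Return 'name==version' for every installed package listed in advisories.

    `advisories` is a hypothetical mapping of package name to the set of
    versions with known vulnerabilities.
    """
    flagged = []
    for name, version in sorted(installed.items()):
        if version in advisories.get(name, set()):
            flagged.append(f"{name}=={version}")
    return flagged
```

Calling `find_flagged(installed_packages(), advisories)` would then give a list of components to review before the project depends on them.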

Design

Now that you have the requirements for your application, the next step is to define and document the product requirements. This is often done through a Software Requirements Specification (SRS) document, which outlines all the product requirements to be designed and developed throughout the project.

Threat Modeling: This means understanding the probable attack scenarios for your application. A common example is SQL injection, where an attacker submits SQL fragments through an input form so that the application runs them as part of its own database query, for example to extract data from the database. Once you understand what the likely attacks against your application will be, you can design countermeasures to protect against them.
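To make the SQL injection scenario concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `users` table, the sample rows, and the function names are invented for illustration. It contrasts a query built by string formatting with one common countermeasure, a parameterized query.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a small, made-up users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: the input is pasted directly into the SQL text.
    query = f"SELECT name, email FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Countermeasure: the placeholder keeps the input as data, not as SQL.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()
```

With the input `' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version looks for a user literally named `' OR '1'='1` and returns nothing.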

Ensuring Secure Design: Here you ensure the design of your application adheres to common best practices in software development. To get started, this is a list of 10 best practices for securing software development.

Implementation

The Implementation phase is where you begin building the application. You also perform debugging by looking at the source code. The goal here is to create a stable and functional first version of the application.

Use Secure Coding Guidelines: To avoid repeating the mistakes of others, it is worthwhile to do a little research into common insecure coding practices before you start coding your products. CERT secure coding, a community-based coding initiative, has put together a list of common coding mistakes, found here. Keeping guidelines like these in mind as you code will help you avoid many of those mistakes.

Scan the Source Code: Static Application Security Testing (SAST) tools scan your source code for vulnerabilities without needing to run the application. You can use these tools as you code to find bugs and fix them. There are many SAST tools out there, but here are six SAST tools that you may want to consider using for your projects.
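The core idea of static scanning, inspecting code without executing it, can be sketched in a few lines with Python's standard `ast` module. This is a toy example, not a substitute for a real SAST tool: the rule set `RISKY_CALLS` and the function `scan_source` are assumptions made for illustration, and real tools apply far richer rules and data-flow analysis.

```python
import ast

# Assumption: a tiny illustrative rule set of calls worth a closer look.
RISKY_CALLS = {"eval", "exec"}


def scan_source(source: str) -> list[tuple[int, str]]:
    """Return (line number, function name) for each risky call found.

    The source is only parsed into a syntax tree; it is never run.
    """
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)
```

Running `scan_source` over each file in a project would produce a list of locations to review by hand, which is roughly how SAST findings feed into the manual review step.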

Manually Review the Code: Even with software tools to help you along the way, manual code reviews are still important. Automated scans won't catch every mistake and lack the human judgment needed for critical thinking. Once the first draft of an application is completed, the source code should be manually reviewed by an expert who is knowledgeable about security bugs in software development.

Testing and Integration

This phase is dedicated to discovering and fixing bugs in the application. This stage involves testing the application at runtime, by using several different types of inputs and seeing if the application handles these inputs correctly.

Fuzzing and Dynamic Scanning: Dynamic Application Security Testing (DAST) tools test for vulnerabilities by simulating attacks at runtime. Fuzzing is an automated software testing technique that feeds random, invalid, or unexpected data into software as input. The goal is to check that, whatever input an attacker feeds into your application, they cannot get around the security features that are in place. Common attacks that exploit user input include SQL injection and cross-site scripting (XSS). You can find some popular DAST tools here.
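The fuzzing loop itself is simple, and a minimal sketch in Python may help show what the tools automate. Everything here is hypothetical: `parse_age` stands in for one of your own input handlers, and the fuzzer treats `ValueError` as the documented way to reject bad input, so any other exception counts as a finding. Real fuzzers generate inputs far more cleverly than this.

```python
import random
import string


def parse_age(text: str) -> int:
    """Hypothetical input handler: accepts ages 0-150, rejects the rest."""
    value = int(text.strip())  # int() raises ValueError on non-numeric text
    if not 0 <= value <= 150:
        raise ValueError("age out of range")
    return value


def random_input(rng: random.Random, max_len: int = 20) -> str:
    """Build a random string of printable characters, possibly empty."""
    length = rng.randint(0, max_len)
    return "".join(rng.choice(string.printable) for _ in range(length))


def fuzz(target, iterations: int = 1000, seed: int = 0) -> list[tuple[str, str]]:
    """Call target with random inputs and collect unexpected exceptions."""
    rng = random.Random(seed)  # seeded so that a failing run can be replayed
    failures = []
    for _ in range(iterations):
        data = random_input(rng)
        try:
            target(data)
        except ValueError:
            pass  # expected: the handler rejected the input cleanly
        except Exception as exc:
            failures.append((data, type(exc).__name__))
    return failures
```

An empty result from `fuzz(parse_age)` only means that none of the generated inputs caused an unexpected failure; it is evidence, not proof, that the handler is robust.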

Penetration Testing: A penetration test is an authorized, simulated cyberattack on your application to identify areas of weakness. This step is important to confirm that the plans and designs you created are truly effective. You can do this by hiring individual security professionals, by engaging a security company, or by using a bug bounty program to crowdsource this step.

Test Environment Decommissioning: Once a test environment is no longer needed it should be taken offline or deleted if it’s a VM. A test environment that is left online after a project is a common risk for many companies. It provides an unnecessary target for hackers and since no one is using it, it’s more likely than other computers not to be patched or updated. This means that any vulnerabilities that are found in its applications will remain there indefinitely. The best practice here is to tie the lifespan of the environment to the length of the project and make it possible for dev teams to request more time if necessary.
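One way to tie an environment's lifespan to the project is to record an expiry date for each environment and check it regularly. The sketch below shows that idea in Python; the class `EnvironmentLease`, its fields, and the helper function are hypothetical names, and the actual shutdown or deletion would be done by whatever tooling manages your infrastructure.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class EnvironmentLease:
    """Hypothetical record linking a test environment to an expiry date."""
    name: str
    expires_on: date  # initially set to the planned end of the project

    def is_expired(self, today: date) -> bool:
        return today > self.expires_on

    def extend(self, days: int) -> None:
        """Grant a dev team's request for more time."""
        if days <= 0:
            raise ValueError("extension must be a positive number of days")
        self.expires_on += timedelta(days=days)


def environments_to_decommission(leases: list[EnvironmentLease], today: date) -> list[str]:
    """Return the names of environments whose lease has run out."""
    return [lease.name for lease in leases if lease.is_expired(today)]
```

A scheduled job could call `environments_to_decommission` each day and hand the resulting names to the team or script responsible for taking them offline.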

Maintenance

Once an application is released to its users, it still needs to be maintained, with patches released to keep the application secure.

Allow for Feedback: Security researchers and hackers with good intentions are constantly looking at software for vulnerabilities. You should have a means for people to contact you if they find something wrong with your application. In many cases this feedback is completely free; you just need a way to receive that information and then create a fix for it.

Ongoing Patching: As issues are found in the application, patches need to be made available for your users. This needs to continue for the full life of your application.

Ongoing Security Tests: Applications should be tested for security vulnerabilities at least once a year.

End of Life

End of Life refers to software that is no longer supported by its developers, meaning there are no more patches, updates, or bug fixes. As part of the decommissioning process, sensitive data needs to be removed and disposed of, unless there is a regulatory requirement or business need to keep it.

Data Retention: Government and regulatory bodies define retention requirements for different types of data. It is important that you confirm your retention requirements and store the data appropriately before getting rid of an application. You may also need to retain data for business purposes. This is acceptable, but you need to document who made that decision and for what purpose.

Data Disposal: At the end of an application's life, all sensitive information should be securely deleted. This includes personal information, encryption keys, and API access keys. If you retain information without a clear need for it and there is a data breach, you can be fined. Under the European Union's GDPR privacy law, over 100 million euros in fines have been collected in the last 2 years. Here you can find some tips on disposing of data that is no longer needed.

Conclusion

The software development lifecycle is a widely used template, and each step has its own requirements for creating a secure application. This article highlights the key activities that need to be completed in each phase. First, you need to plan the technical and regulatory requirements for securing your application. Next, you need to design your application around the likely threats and secure design best practices. Following that, you need to build and test the application, using automated scanning tools, manual code reviews by experts, and finally professionals hired to test your application for flaws.

Once the application has gone live, it needs to be regularly checked and patched as bugs are found. Once its life has ended, the information you need to keep must be secured, and the remaining information must be disposed of correctly to prevent it from being leaked later on.